I received a targeted advertisement on LinkedIn yesterday for JenniAI – a Generative AI (GenAI) writing tool (I guess my constant Googling about AI tools is the cause of this!).

JenniAI boasts various features for academic writing, such as a ‘Thesis Statement Generator’, ‘Paraphrasing Tool’ and ‘AI Essay Outline Generation’ (https://jenni.ai/about).

I’m writing about it because of the ad tagline: ‘Don’t outsource your critical thinking’.

It is ironic because one of the biggest concerns of educators is that students’ use of GenAI tools to summarise articles or books, look up information and even write for them will reduce their ability to think critically (e.g. Cong-Lem et al., 2024; Dong et al., 2024).

In my own MA dissertation research on English for Academic Purposes (EAP) practitioners across the UK, 40% thought that GenAI would have a negative or strongly negative impact on students’ critical thinking skills (and around 31% were unsure of what impact it might have).

Although GenAI may be having an impact on students’ critical thinking, this isn’t a new concern in the academic writing field, as students often struggle with criticality in their academic writing (Wingate, 2012).

What do we even mean by ‘critical thinking’?

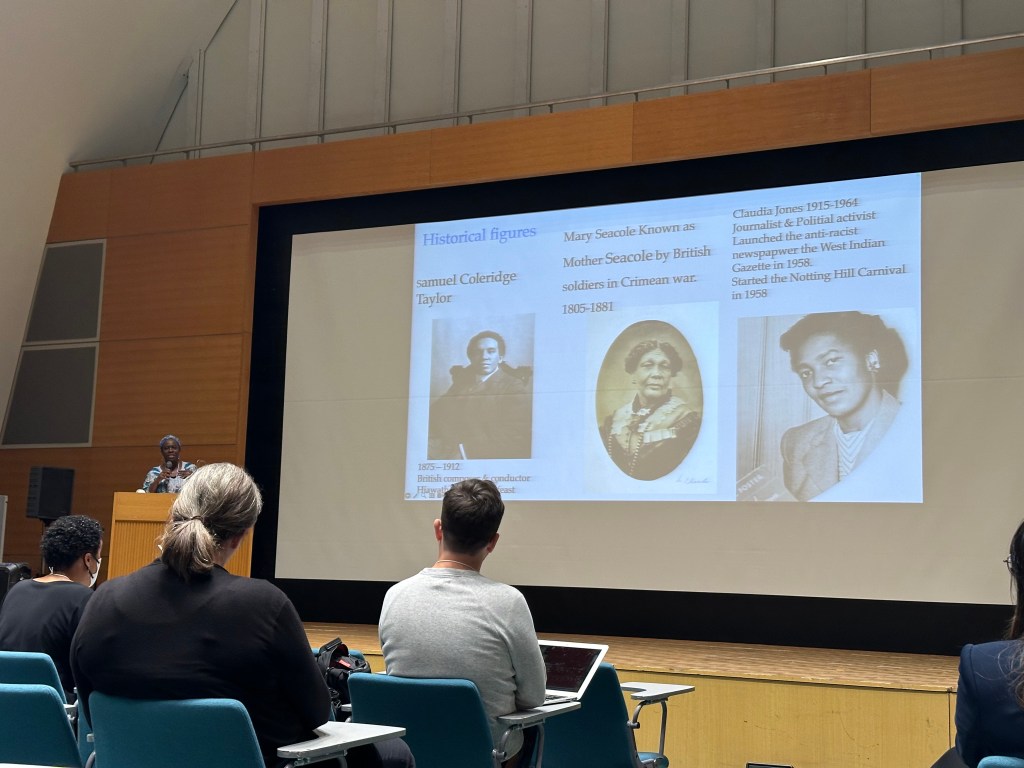

Larson et al. (2024) identify two distinct ways this term is used in the education literature which I find helpful to distinguish:

- The first is the ‘individual’ – how as individuals we use logical thinking to avoid cognitive biases and analyse information to see if it supports claims or beliefs.

- The second is the ‘social’ – originating in sociology, it focuses on prevailing social norms and our ability to question them. We are reflecting in order to notice injustices and challenge orthodoxies.

Critical use of GenAI must involve both kinds of critical thinking. We need to analyse whether the output from GenAI is accurate, justified and coherent. We also need to be wary of the biases of the programmers who wrote the algorithms GenAI is based on and the biases inherent in the training data which the GenAI relies on to produce its output.

Back to JenniAI… do I think it can really help students to do their own critical thinking as the advertisement would like us to believe?

Well, compared to the default ChatGPT, which authoritatively outputs lots of text when prompted, JenniAI writes along with the user and keeps nudging the user’s input in order to adjust the text being generated. This means the user is a bit more active and involved as the text itself is generated. But I think with any GenAI tool, the approach you take, including analysing and fact-checking the output, is more important for being critical. As a student, you could still use JenniAI uncritically to summarise all of your readings and generate your whole written assignment.

So far, GenAI tools haven’t built in guardrails against this kind of uncritical use by students. You can attempt to create custom GPTs (a feature available to paid ChatGPT users) to add extra prompts to the chatbot (like asking it not to tell students the answer, but to guide them to come up with the answer themselves). This may mitigate some of those issues, but it doesn’t replace good pedagogical choices when teaching students about GenAI.

I think the real answer is to develop AI literacy in educators through continued professional development. There will not be a magical AI tool that teaches our learners to be critical. As teachers, we need to use our own AI literacy to pass this knowledge on to our learners through activities which develop students’ critical use of these GenAI tools. We need to make sure students know how GenAI works (as a large language model based on statistical methods of generating text… not as a database of factual information) and how to fact-check sources and look at output with a critical eye.

References:

Cong-Lem, N., Tran, T.N. and Nguyen, T.T. (2024) ‘Academic Integrity In The Age Of Generative AI: Perceptions And Responses Of Vietnamese EFL Teachers’, Teaching English With Technology, 2024(1). Available at: https://doi.org/10.56297/FSYB3031/MXNB7567.

Dong, B., Bai, J., Xu, T. and Zhou, Y. (2024) ‘Large Language Models in Education: A Systematic Review’, in 2024 6th International Conference on Computer Science and Technologies in Education (CSTE). Xi’an, China: IEEE, pp. 131–134. Available at: https://doi.org/10.1109/CSTE62025.2024.00031.

Larson, B.Z., Moser, C., Caza, A., Muehlfeld, K. and Colombo, L.A. (2024) ‘Critical Thinking in the Age of Generative AI’, Academy of Management Learning & Education, 23(3), pp. 373–378. Available at: https://doi.org/10.5465/amle.2024.0338.

Slinn-Funabashi, R.L.M. (2024) ‘Generative AI in Academic Writing: English for Academic Purposes Practitioners Perceptions and Teaching Practice’ [Unpublished Master’s Dissertation] p.38.

Wingate, U. (2012) ‘“Argument!” helping students understand what essay writing is about’, Journal of English for Academic Purposes, 11(2), pp. 145–154. Available at: https://doi.org/10.1016/j.jeap.2011.11.001.
